Decision Trees

Decision Making

Create trees

Grow the tree until a stopping criterion is reached (e.g., maximum depth, minimum information gain)

Greedy, recursive partitioning: pick the best split at the current node, then repeat on each resulting subset

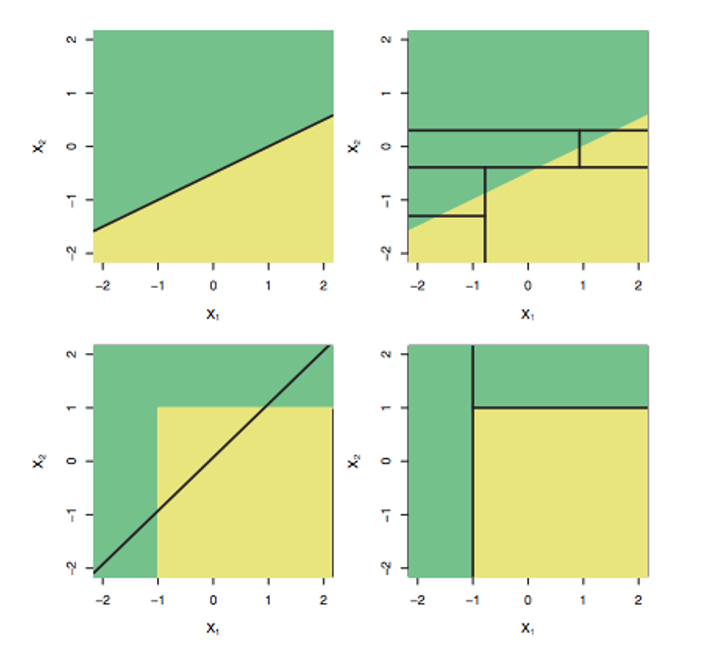
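The greedy, recursive procedure can be sketched in a few lines. This is a minimal sketch under stated assumptions: attributes are categorical, splits are scored by entropy-based information gain (the ID3-style choice; Gini impurity is another common criterion), and the toy data, the helper names `grow`/`classify`, and the `max_depth`/`min_gain` defaults are all hypothetical, made up for illustration.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def info_gain(rows, labels, attr):
    """Drop in entropy obtained by splitting on categorical attribute `attr`."""
    n = len(labels)
    remainder = 0.0
    for value in set(row[attr] for row in rows):
        subset = [y for row, y in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def grow(rows, labels, attrs, depth=0, max_depth=3, min_gain=1e-9):
    """Hypothetical helper: returns a leaf label or a node (attribute, {value: subtree})."""
    # Stopping criteria: pure node, no attributes left, or maximum depth reached.
    if len(set(labels)) == 1 or not attrs or depth >= max_depth:
        return majority(labels)
    # Greedy step: take the single best split at this node and never revisit it.
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))
    if info_gain(rows, labels, best) < min_gain:  # stopping criterion: gain too small
        return majority(labels)
    children = {}
    rest = [a for a in attrs if a != best]
    for value in set(row[best] for row in rows):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        # Recursive step: grow a subtree on the examples that took this branch.
        children[value] = grow([rows[i] for i in idx], [labels[i] for i in idx],
                               rest, depth + 1, max_depth, min_gain)
    return (best, children)

def classify(tree, row):
    """Follow branches until a leaf; raises KeyError for a value never seen in training."""
    while isinstance(tree, tuple):
        attr, children = tree
        tree = children[row[attr]]
    return tree

# Made-up toy data: play unless it is windy and not cloudy.
rows = [
    {"outlook": "sunny", "windy": False}, {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False}, {"outlook": "rainy", "windy": True},
    {"outlook": "cloudy", "windy": False}, {"outlook": "cloudy", "windy": True},
]
labels = ["yes", "no", "yes", "no", "yes", "yes"]

tree = grow(rows, labels, ["outlook", "windy"])
print(tree)
```

With these rows the root split is on `windy` (gain of about 0.46 bits versus about 0.25 for `outlook`), and only the windy branch is split again. Passing `max_depth=1` stops after the root split and labels each branch with its majority class.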

Lines vs Boundaries

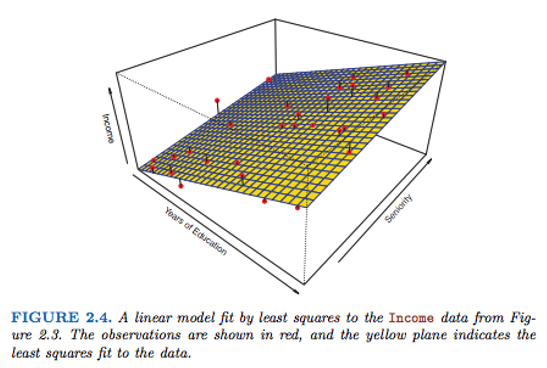

LINEAR REGRESSION

Fits a line through the data

Assumes a linear relationship between the inputs and the target

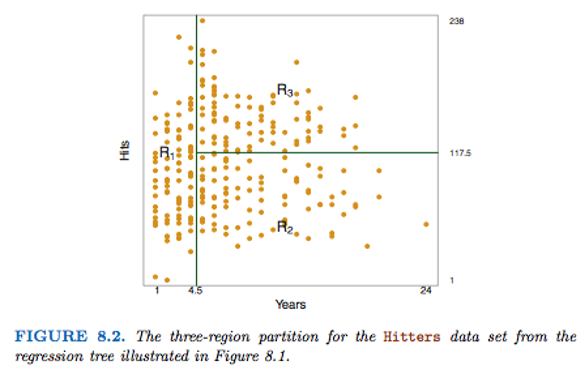
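To make the idea of a line through the data concrete, here is a minimal sketch of ordinary least squares for a single feature. The function name `fit_line` and the data points are made up for illustration; with more features the same idea fits a plane or hyperplane.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the line passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up points lying roughly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.1, 4.9, 7.2, 8.8]
slope, intercept = fit_line(xs, ys)
print(round(slope, 2), round(intercept, 2))  # 1.94 1.15
```

Whatever shape the data has, the model's prediction is always a straight line; that is the linear assumption.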

TREE-BASED METHODS

Partitions the input space with boundaries instead of fitting a line

Can learn complex, nonlinear relationships
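XOR is a standard example of a relationship that no single straight line can separate, yet a depth-2 tree handles it. In standard decision trees (e.g., CART, ID3) each split compares one feature with a threshold, so the boundaries are axis-aligned. The function below is a hand-built sketch; the thresholds of 0.5 are picked by hand for illustration, not learned.

```python
def tree_predict(x1, x2):
    """Hand-built depth-2 tree; each test compares a single feature with a threshold."""
    if x1 < 0.5:                      # root split on x1
        return 1 if x2 >= 0.5 else 0  # second-level split on x2
    return 0 if x2 >= 0.5 else 1      # second-level split on x2

# XOR: the label is 1 exactly when the two inputs differ.
xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
for (x1, x2), label in xor_data:
    print((x1, x2), "predicted:", tree_predict(x1, x2), "true:", label)
```

The two levels of splits carve the plane into four rectangles, which is how a tree captures the interaction between `x1` and `x2`.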

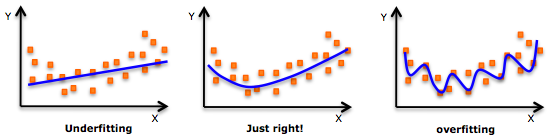

Depth

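Depth: length of the longest path from the root to a leaf node

If too deep, the tree can overfit (it fits noise in the training data)

If too shallow, the tree can underfit (it misses real structure in the data)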
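A minimal sketch of the depth trade-off, using made-up 1-D data with one deliberately mislabeled point. The splitter (`grow`) is hypothetical and scores thresholds by misclassification count for simplicity; real libraries typically use Gini impurity or entropy instead. Depth is counted in edges here; some texts count nodes, which adds 1.

```python
def majority(labels):
    """Majority class of 0/1 labels; ties go to 0."""
    return int(2 * sum(labels) > len(labels))

def errors(labels):
    """Misclassifications if this node predicts its majority class."""
    ones = sum(labels)
    return min(ones, len(labels) - ones)

def grow(xs, ys, depth=0, max_depth=None):
    """Hypothetical greedy 1-D tree: leaf = class label, node = (threshold, left, right)."""
    if len(set(ys)) == 1 or depth == max_depth:
        return majority(ys)
    best = None
    for t in sorted(set(xs))[1:]:  # each threshold leaves both sides non-empty
        err = errors([y for x, y in zip(xs, ys) if x < t]) + \
              errors([y for x, y in zip(xs, ys) if x >= t])
        if best is None or err < best[0]:
            best = (err, t)
    if best is None:  # all x equal: cannot split further
        return majority(ys)
    t = best[1]
    left = [(x, y) for x, y in zip(xs, ys) if x < t]
    right = [(x, y) for x, y in zip(xs, ys) if x >= t]
    return (t,
            grow([x for x, _ in left], [y for _, y in left], depth + 1, max_depth),
            grow([x for x, _ in right], [y for _, y in right], depth + 1, max_depth))

def predict(tree, x):
    while isinstance(tree, tuple):
        t, left, right = tree
        tree = left if x < t else right
    return tree

def depth(tree):
    """Edges on the longest root-to-leaf path (a lone leaf has depth 0)."""
    if not isinstance(tree, tuple):
        return 0
    _, left, right = tree
    return 1 + max(depth(left), depth(right))

def accuracy(tree, xs, ys):
    return sum(predict(tree, x) == y for x, y in zip(xs, ys)) / len(xs)

# Made-up data: the true rule is "label 1 when x >= 5", but the point at x = 2 is mislabeled.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [0, 0, 1, 0, 0, 1, 1, 1, 1, 1]

shallow = grow(xs, ys, max_depth=1)
deep = grow(xs, ys)  # no depth limit: keep splitting until every leaf is pure
print(depth(shallow), accuracy(shallow, xs, ys), predict(shallow, 2.5))  # 1 0.9 0
print(depth(deep), accuracy(deep, xs, ys), predict(deep, 2.5))           # 4 1.0 1
```

The unlimited tree reaches 100% training accuracy only by carving out a region around the noisy point, so it predicts 1 at x = 2.5 even though the underlying rule says 0. The depth-1 tree misclassifies that one training point but captures the real pattern. At the other extreme, `max_depth=0` gives a single leaf that ignores x entirely, which underfits.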

Underfitting vs. Overfitting

Choosing a Restaurant

| Example | Alt | Bar | Fri | Hun | Pat | Price | Rain | Res | Type | Est | Wait (target) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $X_1$ | T | F | F | T | Some |  | F | T | French | 0-10 | T |
| $X_2$ | T | F | F | T | Full |  | F | F | Thai | 30-60 | F |
| $X_3$ | F | T | F | F | Some |  | F | F | Burger | 0-10 | T |
| $X_4$ | T | F | T | T | Full |  | F | F | Thai | 10-30 | T |
| $X_5$ | T | F | T | F | Full |  | F | T | French | >60 | F |
| $X_6$ | F | T | F | T | Some |  | T | T | Italian | 0-10 | T |
| $X_7$ | F | T | F | F | None |  | T | F | Burger | 0-10 | F |
| $X_8$ | F | F | F | T | Some |  | T | T | Thai | 0-10 | T |
| $X_9$ | F | T | T | F | Full |  | T | F | Burger | >60 | F |
| $X_{10}$ | T | T | T | T | Full |  | F | T | Italian | 10-30 | F |
| $X_{11}$ | F | F | F | F | None |  | F | F | Thai | 0-10 | F |
| $X_{12}$ | T | T | T | T | Full |  | F | F | Burger | 30-60 | T |

T = true, F = false. Alt: a suitable alternative restaurant is nearby; Bar: the restaurant has a bar to wait in; Fri: it is Friday or Saturday; Hun: we are hungry; Pat: how many patrons are there (None, Some, Full); Price: price range; Rain: it is raining; Res: we have a reservation; Type: kind of restaurant; Est: estimated wait in minutes; Wait: whether we wait for a table (the target).
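One common way to quantify how much information a split gives is information gain, the drop in entropy after splitting (the criterion used by ID3; Gini impurity is another popular choice). With $S$ the set of examples at a node, $p_c$ the fraction of them in class $c$, and $S_v$ the examples whose attribute $A$ takes value $v$:

$$H(S) = -\sum_{c} p_c \log_2 p_c \qquad \mathrm{Gain}(S, A) = H(S) - \sum_{v \in \mathrm{values}(A)} \frac{|S_v|}{|S|}\, H(S_v)$$

The table has 6 T and 6 F examples, so before any split the entropy is

$$H(S) = -\left(\tfrac{6}{12}\log_2\tfrac{6}{12} + \tfrac{6}{12}\log_2\tfrac{6}{12}\right) = 1 \text{ bit}$$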

Which attribute should we split on first?

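Patrons (Pat) is a better choice because it gives more information about the classification: every None example is F and every Some example is T, so only the Full branch (2 T, 4 F) still needs to be split. Type, by contrast, gives no information, since each restaurant type has as many T as F examples.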
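The comparison can be checked by computing the information gain of every attribute on the twelve examples. This is a minimal sketch: the rows are copied from the restaurant table, Price is left out because the table gives no Price values, and the names `info_gain`, `ATTRS`, and `TABLE` are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def info_gain(examples, labels, attr):
    """Entropy of the labels minus the weighted entropy after splitting on `attr`."""
    n = len(labels)
    remainder = 0.0
    for value in set(ex[attr] for ex in examples):
        subset = [y for ex, y in zip(examples, labels) if ex[attr] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder

# Rows X1..X12 from the table (Price omitted: no values given); last column is Wait.
ATTRS = ["Alt", "Bar", "Fri", "Hun", "Pat", "Rain", "Res", "Type", "Est"]
TABLE = """
T F F T Some F T French 0-10 T
T F F T Full F F Thai 30-60 F
F T F F Some F F Burger 0-10 T
T F T T Full F F Thai 10-30 T
T F T F Full F T French >60 F
F T F T Some T T Italian 0-10 T
F T F F None T F Burger 0-10 F
F F F T Some T T Thai 0-10 T
F T T F Full T F Burger >60 F
T T T T Full F T Italian 10-30 F
F F F F None F F Thai 0-10 F
T T T T Full F F Burger 30-60 T
"""
rows = [line.split() for line in TABLE.strip().splitlines()]
examples = [dict(zip(ATTRS, row[:-1])) for row in rows]
labels = [row[-1] for row in rows]

gains = {a: info_gain(examples, labels, a) for a in ATTRS}
for a in sorted(gains, key=gains.get, reverse=True):
    print(f"{a:5} {gains[a]:.3f}")
best = max(gains, key=gains.get)
print("best split:", best)  # best split: Pat
```

Pat comes out on top at about 0.541 bits (1 minus half the entropy of the 2 T / 4 F Full branch), while Type scores 0 because each restaurant type is split evenly between T and F.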